Have you ever wanted to know about Generative Adversarial Networks (GANs)? Maybe you just want to catch up on the topic? Or maybe you simply want to see how these networks have been refined over the last few years? If so, this post might interest you!

What this post is not about

First things first, this is what you won’t find in this post:

- Complex technical explanations
- Code (there are links to code for those interested, though)
- An exhaustive research list (you can already find it here)

What this post is about

- A summary of relevant topics about GANs
- A lot of links to other sites, posts and articles so you can decide where to focus

Index

- Understanding GANs
- GANs: the evolution
- DCGANs
- Improved DCGANs
- Conditional GANs
- InfoGANs
- Wasserstein GANs
- Closing

Understanding GANs

If you are familiar with GANs you can probably skip this section.

If you are reading this, chances are that you have heard GANs are pretty promising. Is the hype justified? This is what Yann LeCun, director of Facebook AI, thinks about them:

“Generative Adversarial Networks is the most interesting idea in the last ten years in machine learning.”

I personally think that GANs have huge potential, but we still have a lot to figure out.

So, what are GANs? I’m going to describe them very briefly. In case you are not familiar with them and want to know more, there are a lot of great sites with good explanations. As a personal recommendation, I like the ones from Eric Jang and Brandon Amos.

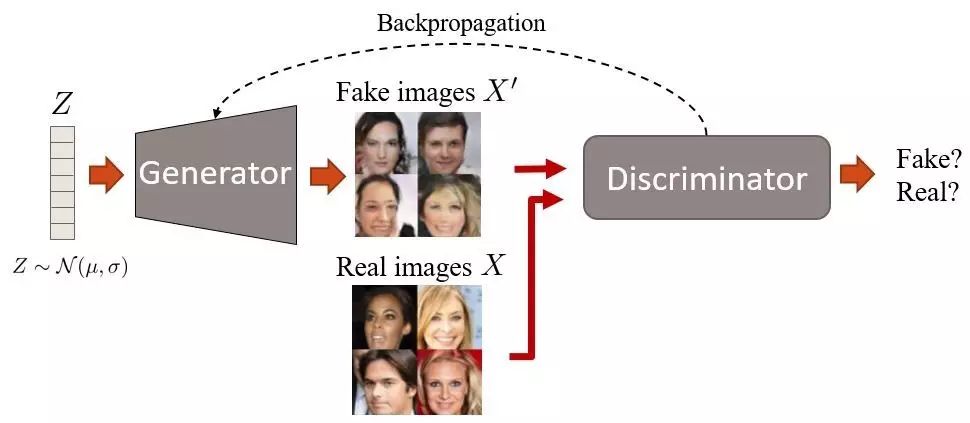

GANs, originally proposed by Ian Goodfellow, have two networks: a generator and a discriminator. They are trained at the same time and compete against each other in a minimax game. The generator is trained to fool the discriminator by creating realistic images, and the discriminator is trained not to be fooled by the generator.

GAN training overview.
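For reference, the minimax game between the two networks is usually written as the value function below, as in the original GAN paper. Here D(x) is the discriminator's estimated probability that x is a real image, G(z) is the image the generator produces from noise z, p_data is the distribution of real images and p_z is the simple noise distribution:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes the value up by scoring real images close to 1 and fake ones close to 0, while the generator pushes it down by making D(G(z)) as large as it can.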

At first, the generator generates images. It does this by sampling a noise vector Z from a simple distribution (e.g. normal) and then upsampling this vector into an image. In the first iterations, these images will look very noisy. Then, the discriminator is given fake and real images and learns to distinguish them. The generator later receives the “feedback” of the discriminator through a backpropagation step, becoming better at generating images. In the end, we want the distribution of fake images to be as close as possible to the distribution of real images. Or, in simple words, we want fake images to look as plausible as possible.
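To make the loop described above a bit more concrete, here is a minimal toy sketch in plain Python. It is not taken from any of the linked implementations, and everything in it is an assumption made for illustration: the "images" are single numbers drawn from a made-up real distribution N(4, 1), the generator is a linear map, the discriminator is a logistic regression, and the function names (`generate`, `discriminate`, `discriminator_step`, `generator_step`, `train`) are hypothetical. The gradients are written out by hand so the "feedback" the generator receives from the discriminator is visible.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def generate(params_g, z):
    """Toy generator: maps a noise value z to a fake sample a * z + b."""
    a, b = params_g
    return a * z + b


def discriminate(params_d, x):
    """Toy discriminator: estimated probability that x is a real sample."""
    w, c = params_d
    return sigmoid(w * x + c)


def discriminator_step(params_d, reals, fakes, lr):
    """One gradient step on -[log D(real) + log(1 - D(fake))]."""
    w, c = params_d
    grad_w = grad_c = 0.0
    for x in reals:
        d = discriminate(params_d, x)
        grad_w += (d - 1.0) * x  # gradient of -log D(x) w.r.t. w
        grad_c += d - 1.0
    for x in fakes:
        d = discriminate(params_d, x)
        grad_w += d * x          # gradient of -log(1 - D(x)) w.r.t. w
        grad_c += d
    n = len(reals) + len(fakes)
    return (w - lr * grad_w / n, c - lr * grad_c / n)


def generator_step(params_g, params_d, noise, lr):
    """One gradient step on log(1 - D(G(z))), the minimax generator loss."""
    a, b = params_g
    w, _ = params_d
    grad_a = grad_b = 0.0
    for z in noise:
        d = discriminate(params_d, generate(params_g, z))
        # Chain rule through the discriminator: d/dx log(1 - D(x)) = -D(x) * w
        grad_a += -d * w * z
        grad_b += -d * w
    n = len(noise)
    return (a - lr * grad_a / n, b - lr * grad_b / n)


def train(steps=2000, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    params_g = (1.0, 0.0)  # generator starts by producing N(0, 1) samples
    params_d = (0.0, 0.0)  # discriminator starts undecided (D = 0.5 everywhere)
    for _ in range(steps):
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fakes = [generate(params_g, z) for z in noise]
        reals = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # assumed real data
        params_d = discriminator_step(params_d, reals, fakes, lr)
        params_g = generator_step(params_g, params_d, noise, lr)
    return params_g, params_d


if __name__ == "__main__":
    (a, b), (w, c) = train()
    print("generator scale and offset:", a, b)
    print("discriminator weight and bias:", w, c)
```

In this toy setting the generator offset should drift toward the region the discriminator scores as real. With such a simple discriminator the two players can also keep chasing each other instead of settling, which is a small-scale version of the instability that comes with minimax training.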

It is worth mentioning that, due to the minimax optimization used in GANs, training can be quite unstable. There are some hacks, though, that you can use for more robust training.
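As one example of what such a trick can look like, here is a small sketch of one-sided label smoothing, a technique from the Improved GAN work by Salimans et al. Whether it appears in the list of hacks linked above is an assumption on my part, and the function names `bce` and `discriminator_loss` and the default target of 0.9 are illustrative choices only. The idea is to train the discriminator with a target slightly below 1 for real images so that it is penalized for becoming overconfident.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for one prediction in (0, 1)."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake, real_label=0.9):
    """Discriminator loss with one-sided label smoothing.

    d_real and d_fake are lists of discriminator outputs on real and fake
    samples. Only the real target is softened (real_label, assumed 0.9 here);
    the fake target stays at 0.
    """
    real_term = sum(bce(p, real_label) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


if __name__ == "__main__":
    confident_real, confident_fake = [0.999], [0.001]
    print("hard labels:    ", discriminator_loss(confident_real, confident_fake, real_label=1.0))
    print("smoothed labels:", discriminator_loss(confident_real, confident_fake, real_label=0.9))
```

With the smoothed target, a discriminator that outputs values very close to 1 on real images pays a higher loss than one that outputs about 0.9, which discourages the extreme confidence that tends to starve the generator of useful gradients.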

Link: http://guimperarnau.com/blog/2017/03/Fantastic-GANs-and-where-to-find-them
