Machine Learning: A Summary of Feature Engineering in R

Feature engineering is paramount in building a good predictive model. It is important to gain a deep understanding of the data being analyzed, because the characteristics of the selected features largely determine the quality of the trained model.

Why is Feature Engineering important?

- Too many features, or redundant features, can increase the run-time complexity of training the model. In some cases this leads to over-fitting, as the model may attempt to fit the training data too perfectly.

- Understanding and visualizing the data, and seeing which features matter more than others, helps reduce overall noise and improve the accuracy of the system.

- Defying the curse of dimensionality: avoid increasing the number of features beyond the point where additional features only degrade model performance.

- Not all features are numeric, so some kind of transformation is required (e.g. creating a feature vector from textual data).
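As a small illustration of the last point, here is a minimal sketch of turning a non-numeric feature into numeric columns. It uses a categorical column rather than free text, which is a simpler case than the textual example mentioned above. The choice of `iris$Species` and the variable name `dummies` are my own assumptions for the example.

```r
# Sketch: one-hot encode the Species factor from the built-in iris data set
data(iris)

# "- 1" drops the intercept so that every factor level gets its own 0/1 column
dummies <- model.matrix(~ Species - 1, data = iris)

head(dummies)   # one indicator column per species
dim(dummies)    # one row per observation, one column per level
```

Each row has exactly one indicator set to 1, so the encoded columns can be bound to the numeric measurements with `cbind()` if a model needs a purely numeric input.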

This blog walks through a few well-known methods for selecting, filtering or constructing new features, showing how to apply each quickly in R rather than explaining the theoretical mathematics or statistics behind it. Note that this is by no means an exhaustive list of methods, and I try to keep the concepts crisp and to the point.

How to categorize Feature Engineering?

Here I approach them as follows:

- Feature Filters: selecting a subset of relevant features from the feature set.

- Wrapper Methods: evaluating different feature subsets against some criterion to pick the best possible subset.

- Feature Extraction and Construction: extracting and creating new features from existing features.

- Embedded Methods: feature selection that is performed as part of the training process.

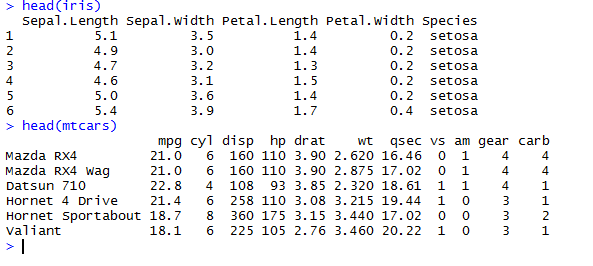

Most of the examples use the data sets that ship with R. To quickly list all the data sets available to you from R:

> data()

We will use either the mtcars or the iris data set for most of the examples. As these data sets are very small, some of the examples below could exhibit over-fitting. Fixing that is outside the scope of this blog.

Feature set of iris and mtcars:
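One quick way to inspect these feature sets yourself is sketched below. It relies only on base R and the two built-in data sets; which inspection functions to call is my own choice for the example.

```r
# Inspect the feature sets of the two built-in data sets
data(iris)
data(mtcars)

names(iris)     # four numeric measurements plus the Species factor
names(mtcars)   # numeric columns describing each car

str(iris)       # column types and a preview of the values
dim(mtcars)     # number of rows and columns
```

`str()` is handy here because it shows at a glance which columns are numeric and which are factors, which matters when choosing a filter or a transformation later on.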

Feature Filters

Filter methods select a subset of the existing features based on certain criteria. The features a filter chooses depend only on the properties of the feature variables themselves and are not tuned to any specific machine learning algorithm. Filter methods can be used to pre-process the data, keeping the meaningful features, reducing the feature space and helping to avoid over-fitting, especially when the number of data points is small compared to the number of features.
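To make the idea concrete, here is a minimal sketch of one possible filter: ranking features by their absolute Pearson correlation with a target and keeping those above a cutoff. Treating `mpg` as the target, the 0.7 threshold and the variable names are all assumptions made for illustration, not a recommended recipe.

```r
# Filter sketch: rank mtcars features by absolute correlation with mpg
data(mtcars)

target   <- "mpg"                          # assumed target variable
features <- setdiff(names(mtcars), target)

# Absolute Pearson correlation of every feature with the target
scores <- sapply(features, function(f) abs(cor(mtcars[[f]], mtcars[[target]])))
scores <- sort(scores, decreasing = TRUE)

threshold <- 0.7                           # illustrative cutoff, not a recommendation
selected  <- names(scores[scores > threshold])

print(round(scores, 2))   # ranked relevance scores
print(selected)           # features that pass the filter
```

Note that no model is trained at any point: the score depends only on the data, which is what distinguishes a filter from the wrapper and embedded methods.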