Welcome to Part 2 of our tour through modern machine learning algorithms. In this part, we’ll cover methods for Dimensionality Reduction, further broken into Feature Selection and Feature Extraction. In general, these tasks are rarely performed in isolation. Instead, they’re often preprocessing steps to support other tasks.

If you missed Part 1, you can check it out here. It explains our methodology for categorizing algorithms, and it covers the “Big 3” machine learning tasks:

Regression

Classification

Clustering

In this part, we’ll cover:

Feature Selection

Feature Extraction

We will also cover other tasks, such as Density Estimation and Anomaly Detection, in dedicated guides in the future.

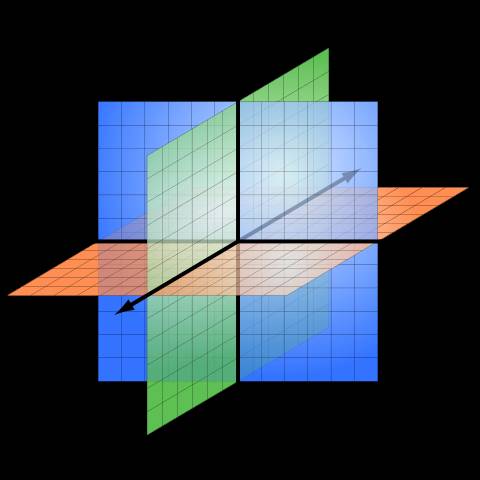

The Curse of Dimensionality

In machine learning, “dimensionality” simply refers to the number of features (i.e. input variables) in your dataset.

When the number of features is very large relative to the number of observations in your dataset, certain algorithms struggle to train effective models. This is called the “Curse of Dimensionality,” and it’s especially relevant for clustering algorithms that rely on distance calculations.
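To make the point about distance calculations concrete, here is a minimal sketch. It assumes synthetic data drawn uniformly from a unit hypercube, and the helper name `relative_contrast` is made up for this illustration. It measures how much farther the farthest point is than the nearest one, relative to the nearest distance. As the number of features grows, that gap tends to shrink, so "near" and "far" become harder to tell apart.

```python
import numpy as np

def relative_contrast(n_points, n_dims, seed=0):
    """Return (max_dist - min_dist) / min_dist from one random query point
    to n_points random data points, all drawn uniformly from [0, 1]^n_dims."""
    rng = np.random.default_rng(seed)
    data = rng.random((n_points, n_dims))   # synthetic observations (an assumption)
    query = rng.random(n_dims)              # a single synthetic query point
    dists = np.linalg.norm(data - query, axis=1)  # Euclidean distances
    return (dists.max() - dists.min()) / dists.min()

# Same number of observations, increasing number of features.
contrasts = {d: relative_contrast(500, d) for d in (2, 10, 100, 1000)}
for d, c in contrasts.items():
    print(f"{d:>5} features: relative contrast = {c:.2f}")
```

Real datasets are rarely uniform, so treat this as an illustration of the trend rather than a measurement of any particular dataset.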

A Quora user has provided an excellent analogy for the Curse of Dimensionality, which we'll borrow here:

Let's say you have a straight line 100 yards long and you dropped a penny somewhere on it. It wouldn't be too hard to find. You walk along the line and it takes two minutes.

Now let's say you have a square 100 yards on each side and you dropped a penny somewhere on it. It would be pretty hard, like searching across two football fields stuck together. It could take days.

Now a cube 100 yards across. That's like searching a 30-story building the size of a football stadium. Ugh.

The difficulty of searching through the space gets a lot harder as you have more dimensions.
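The arithmetic behind the analogy can be sketched in a few lines. This assumes, purely for illustration, that the search space is divided into one-yard cells and that each cell takes the same effort to check. The function name `cells_to_search` is hypothetical.

```python
def cells_to_search(side_yards, n_dims):
    """Number of one-yard cells in a line, square, cube, ... with the given side length."""
    return side_yards ** n_dims

# Line, square, cube, then a few higher-dimensional cases.
for n_dims in (1, 2, 3, 10):
    print(f"{n_dims} dimension(s): {cells_to_search(100, n_dims):,} cells")
```

Each added dimension multiplies the space by another factor of 100, while the single penny (or the fixed number of observations in a dataset) stays the same.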

In this guide, we'll look at the 2 primary methods for reducing dimensionality: Feature Selection and Feature Extraction.

Link:

https://elitedatascience.com/dimensionality-reduction-algorithms

Original link:

http://weibo.com/1402400261/F5OPo9EmX?from=page_1005051402400261_profile&wvr=6&mod=weibotime&type=comment