数据挖掘入门与实战 (Data Mining: Introduction and Practice), WeChat official account: datadw

Dataset

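Every year, high school and college students apply to a wide range of colleges and universities. Each student has a unique set of test scores, grades, and background information, and the admissions committee uses this data to decide whether to accept each applicant. Because the decision is simply accept or reject, a binary classification algorithm fits this setting, and logistic regression is a good choice.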

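gre - GRE (Graduate Record Examinations) entrance exam score

gpa - cumulative grade point average

admit - whether the applicant was admitted: 1 (admitted) or 0 (not admitted)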

Use Linear Regression To Predict Admission

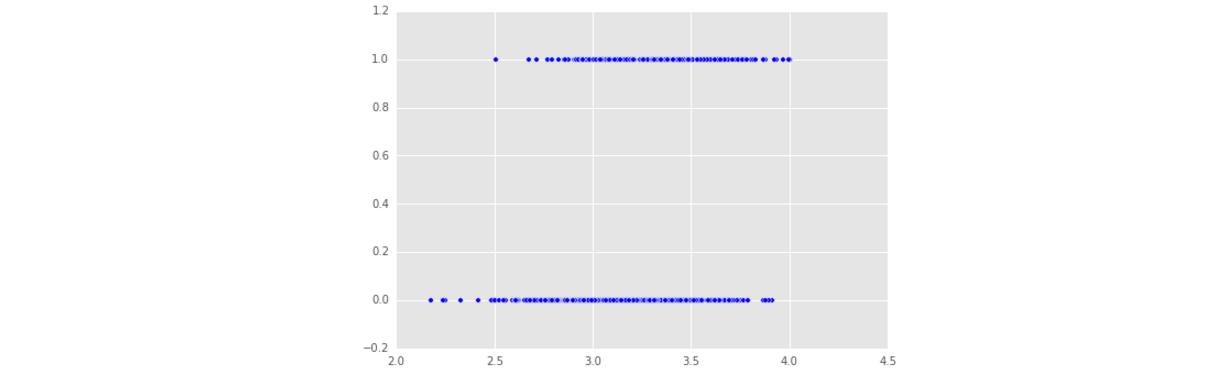

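This is the raw data; admit takes the value 0 or 1. The scatter plot shows that there is no linear relationship between gpa and admit, because admit only takes two values.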

import pandas

import matplotlib.pyplot as plt

admissions = pandas.read_csv("admissions.csv")

plt.scatter(admissions["gpa"], admissions["admit"])

plt.show()

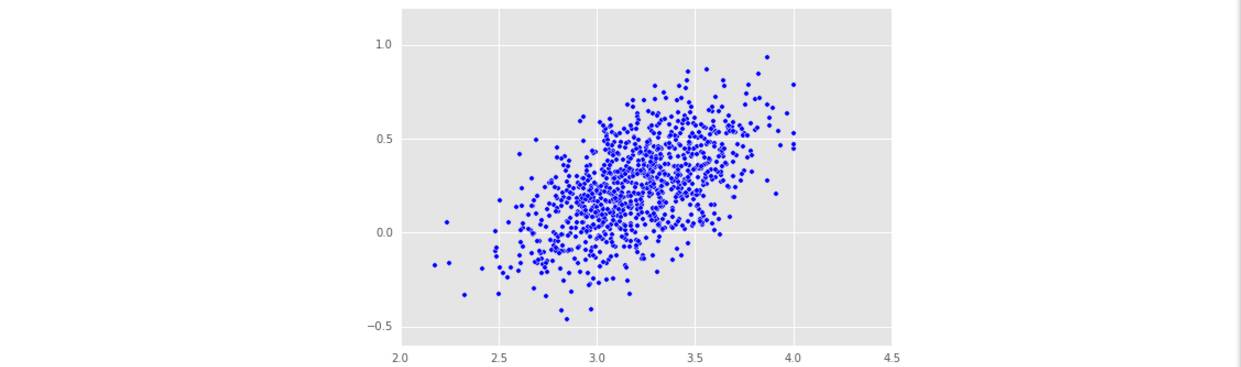

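This plot shows the admit values predicted by a linear regression model. The predictions (admit_prediction) spread over a wide range and include negative values, which makes no sense for a 0/1 outcome.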

# The admissions DataFrame is in memory

# Import linear regression class

from sklearn.linear_model import LinearRegression

# Initialize a linear regression model

model = LinearRegression()

# Fit model

model.fit(admissions[['gre', 'gpa']], admissions["admit"])

# Prediction of admission

admit_prediction = model.predict(admissions[['gre', 'gpa']])

# Plot the predicted admit values against gpa

plt.scatter(admissions["gpa"], admit_prediction)

plt.show()
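To see why a straight line is a poor fit for a 0/1 target without needing admissions.csv, here is a minimal pure-Python sketch that fits ordinary least squares by hand to a tiny, made-up set of (gpa, admit) pairs. The toy data and the helper name `fit_line` are hypothetical. Because the fitted line is unbounded, its predictions can drop below 0 or rise above 1 as gpa moves away from the middle of the data.

```python
# Hypothetical toy data: five gpa values and their 0/1 admit labels
gpas = [2.0, 2.5, 3.0, 3.5, 4.0]
admits = [0, 0, 0, 1, 1]


def fit_line(xs, ys):
    """Return (intercept, slope) of the ordinary least-squares line y = a + b * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


intercept, slope = fit_line(gpas, admits)

# "Predicted admit" values from the straight line at the observed gpas
predictions = [intercept + slope * x for x in gpas]
print(predictions)  # the first value is negative, not a valid probability

# Extrapolating to a higher gpa pushes the prediction above 1
print(intercept + slope * 4.5)
```

The same problem appears with the real data in the plot above; logistic regression avoids it by squashing the linear score into (0, 1).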

The Logistic Function

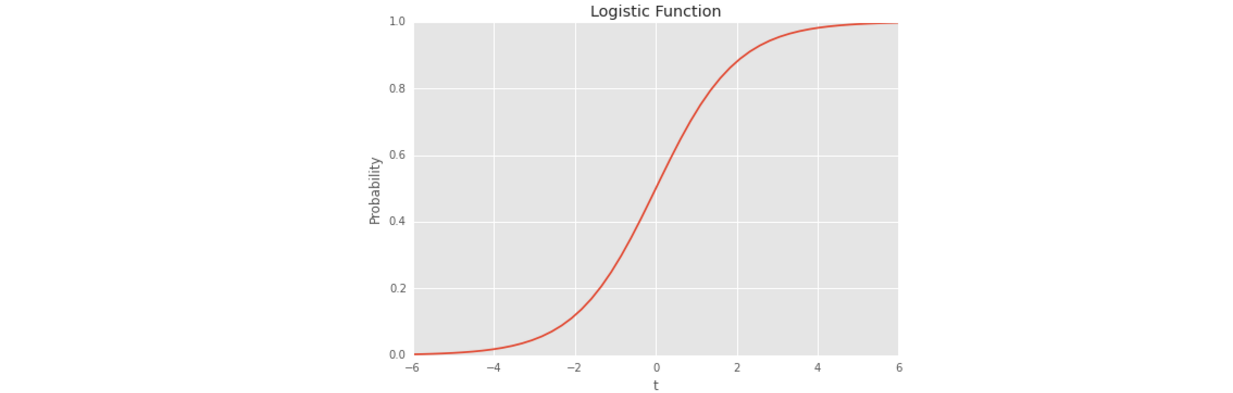

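Logistic regression is a popular classification method that constrains its output to lie between 0 and 1. The output can be interpreted as the probability of an event (here, admission) given a set of inputs, as with other probabilistic classifiers.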
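In formula form, the function plotted below and the model fitted later look roughly like this; the coefficient symbols beta_0, beta_1, beta_2 are our notation, not the article's. The model passes a linear combination of the features through the logistic function, so its output always lies strictly between 0 and 1, and taking the log-odds (logit) of both sides shows that the model is linear on the log-odds scale:

```latex
\sigma(t) = \frac{e^{t}}{1 + e^{t}} = \frac{1}{1 + e^{-t}}, \qquad 0 < \sigma(t) < 1

p = P(\text{admit} = 1 \mid \text{gpa}, \text{gre}) = \sigma(\beta_0 + \beta_1\,\text{gpa} + \beta_2\,\text{gre})

\log\frac{p}{1 - p} = \beta_0 + \beta_1\,\text{gpa} + \beta_2\,\text{gre}
```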

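import numpy as np

# Logistic (sigmoid) function. Despite its name, logit() computes e^x / (1 + e^x),
# which is the inverse of the logit (log-odds) function.

def logit(x):

    # np.exp(x) raises e to the power x, i.e. e^x, where e ~= 2.71828

    return np.exp(x) / (1 + np.exp(x))

# np.linspace is a NumPy function that produces evenly spaced numbers over a specified interval.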

# Create an array with 50 values between -6 and 6 as t

t = np.linspace(-6,6,50, dtype=float)

# Get logistic fits

ylogit = logit(t)

# plot the logistic function

plt.plot(t, ylogit, label="logistic")

plt.ylabel("Probability")

plt.xlabel("t")

plt.title("Logistic Function")

plt.show()

a = logit(-10)

b = logit(10)

'''

a:4.5397868702434395e-05

b:0.99995460213129761

'''
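One caveat about the `logit()` helper above: `np.exp(x)` overflows for large positive inputs (for example `np.exp(1000)` is `inf`, and `inf / (1 + inf)` evaluates to `nan`). Below is a minimal sketch of a numerically stable version using only the standard `math` module; the name `stable_logistic` is ours. It never exponentiates a positive number, so it cannot overflow. SciPy also provides a vectorized, stable implementation as `scipy.special.expit`.

```python
import math


def stable_logistic(x: float) -> float:
    """Logistic (sigmoid) function 1 / (1 + e^(-x)) that avoids overflow."""
    if x >= 0:
        # For x >= 0, e^(-x) is at most 1, so math.exp cannot overflow
        return 1.0 / (1.0 + math.exp(-x))
    # For x < 0, use the equivalent form e^x / (1 + e^x); here e^x is below 1
    z = math.exp(x)
    return z / (1.0 + z)


for value in (-1000, -10, 0, 10, 1000):
    print(value, stable_logistic(value))
```

For moderate inputs such as -10 and 10 it reproduces the values of a and b printed above; for extreme inputs it saturates cleanly at 0.0 and 1.0 instead of failing.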

Logistic Regression

Model Data

from sklearn.linear_model import LogisticRegression

# Randomly shuffle our data for the training and test set

admissions = admissions.loc[np.random.permutation(admissions.index)]

# Split the shuffled data: train on the first 700 rows, test on the remaining 300

num_train = 700

data_train = admissions.iloc[:num_train]

data_test = admissions.iloc[num_train:]
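Because `np.random.permutation` is called without a seed, every run produces a different split, and therefore slightly different coefficients and accuracy figures. A minimal sketch of a reproducible alternative, assuming pandas' `DataFrame.sample` with `frac=1` (shuffle all rows) and a fixed `random_state`. The toy DataFrame, its value ranges, and the seed values are placeholders standing in for admissions.csv; with the real data you would call `admissions.sample(...)` instead.

```python
import numpy as np
import pandas as pd

# Placeholder data standing in for admissions.csv (1000 rows, same column names)
rng = np.random.default_rng(0)
toy_admissions = pd.DataFrame({
    "gre": rng.integers(200, 800, size=1000),
    "gpa": rng.uniform(2.0, 4.0, size=1000).round(2),
    "admit": rng.integers(0, 2, size=1000),
})

# Shuffle every row with a fixed seed so the split is identical on every run
toy_shuffled = toy_admissions.sample(frac=1, random_state=1)

num_train = 700
toy_train = toy_shuffled.iloc[:num_train]  # first 700 shuffled rows
toy_test = toy_shuffled.iloc[num_train:]   # remaining 300 rows

print(len(toy_train), len(toy_test))
```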

# Fit Logistic regression to admit with gpa and gre as features using the training set

logistic_model = LogisticRegression()

logistic_model.fit(data_train[['gpa', 'gre']], data_train['admit'])

# Print the model's coefficients

print(logistic_model.coef_)

'''

[[ 0.38004023 0.00791207]]

'''
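Logistic regression coefficients live on the log-odds scale: holding the other feature fixed, a one-unit increase in a feature adds its coefficient to the log-odds of admission, which multiplies the odds by e raised to that coefficient. A small sketch of that arithmetic, plugging in the coefficients printed above (their order follows the feature list `['gpa', 'gre']`). For this particular random split, one extra GPA point multiplies the odds by roughly 1.46 and 100 extra GRE points by roughly 2.2; a different shuffle will give somewhat different numbers.

```python
import math

# Coefficients printed by logistic_model.coef_ above, in the order ['gpa', 'gre']
coef_gpa = 0.38004023
coef_gre = 0.00791207

# Odds ratio for a change in a feature: e^(coefficient * change)
odds_ratio_gpa = math.exp(coef_gpa)            # +1.0 GPA point
odds_ratio_gre_100 = math.exp(100 * coef_gre)  # +100 GRE points

print("Odds ratio per GPA point: {:.3f}".format(odds_ratio_gpa))
print("Odds ratio per 100 GRE points: {:.3f}".format(odds_ratio_gre_100))
```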

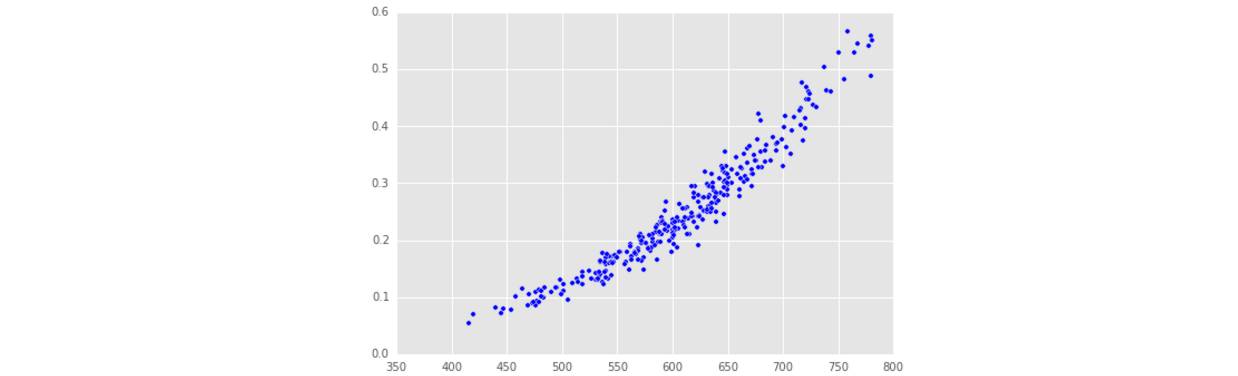

# Predicted probability of admission (column 1 of predict_proba) for the training and test sets

fitted_vals = logistic_model.predict_proba(data_train[['gpa', 'gre']])[:,1]

fitted_test = logistic_model.predict_proba(data_test[['gpa', 'gre']])[:,1]

plt.scatter(data_test["gre"], fitted_test)

plt.show()
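The column `predict_proba(...)[:, 1]` is the logistic function applied to a linear score built from the fitted intercept (`logistic_model.intercept_`) and coefficients (`logistic_model.coef_`). A minimal sketch of that computation in plain Python; the function name is hypothetical, and because the article does not print the intercept, the value -6.0 used in the example calls is a made-up placeholder.

```python
import math


def admission_probability(intercept, coef_gpa, coef_gre, gpa, gre):
    """P(admit = 1) under a logistic regression: sigmoid(b0 + b1*gpa + b2*gre)."""
    score = intercept + coef_gpa * gpa + coef_gre * gre  # log-odds of admission
    # Note: for very negative scores math.exp(-score) can overflow
    return 1.0 / (1.0 + math.exp(-score))


# Coefficients from the article; intercept = -6.0 is a hypothetical placeholder
low = admission_probability(-6.0, 0.38004023, 0.00791207, gpa=3.0, gre=500)
high = admission_probability(-6.0, 0.38004023, 0.00791207, gpa=3.0, gre=700)
print(low, high)
```

Since the gre coefficient is positive, the predicted probability rises with gre at a fixed gpa, which is the upward trend the scatter plot of `fitted_test` against gre shows.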

Predictive Power

# .predict() uses a probability threshold of 0.50 by default

predicted = logistic_model.predict(data_train[['gpa','gre']])

# The mean of the boolean array (True where the prediction matches the label) is the accuracy

accuracy_train = (predicted == data_train['admit']).mean()

# Print the accuracy

print("Accuracy in Training Set = {s}".format(s=accuracy_train))

'''

# (Formatting the output with str.format like this also works well)

Accuracy in Training Set = 0.7785714285714286

'''
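For a binary model, the rule behind `.predict()` amounts to labelling an applicant as admitted when the predicted probability is strictly greater than 0.5. A small pure-Python sketch of applying an arbitrary threshold to predicted probabilities and scoring the result as the fraction of matches; the helper names and the toy probabilities and labels are hypothetical.

```python
def classify(probabilities, threshold=0.5):
    """Label 1 when the probability is strictly above the threshold, else 0."""
    return [1 if p > threshold else 0 for p in probabilities]


def accuracy(predicted, actual):
    """Fraction of positions where the prediction equals the true label."""
    matches = sum(1 for p, a in zip(predicted, actual) if p == a)
    return matches / len(actual)


# Hypothetical predicted probabilities and true admit labels
probs = [0.15, 0.40, 0.55, 0.30, 0.80, 0.45]
labels = [0, 1, 1, 0, 1, 0]

for t in (0.3, 0.5, 0.7):
    print(t, accuracy(classify(probs, t), labels))
```

Raising the threshold makes the model more conservative about predicting admission; the best threshold depends on how costly false acceptances are compared with false rejections.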

# Percentage of those admitted

percent_admitted = data_test["admit"].mean() * 100

# Predicted to be admitted

predicted = logistic_model.predict(data_test[['gpa','gre']])

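# What proportion of our predictions were correct

accuracy_test = (predicted == data_test['admit']).mean()

print("Percentage admitted in Test Set = {s}".format(s=percent_admitted))

print("Accuracy in Test Set = {s}".format(s=accuracy_test))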

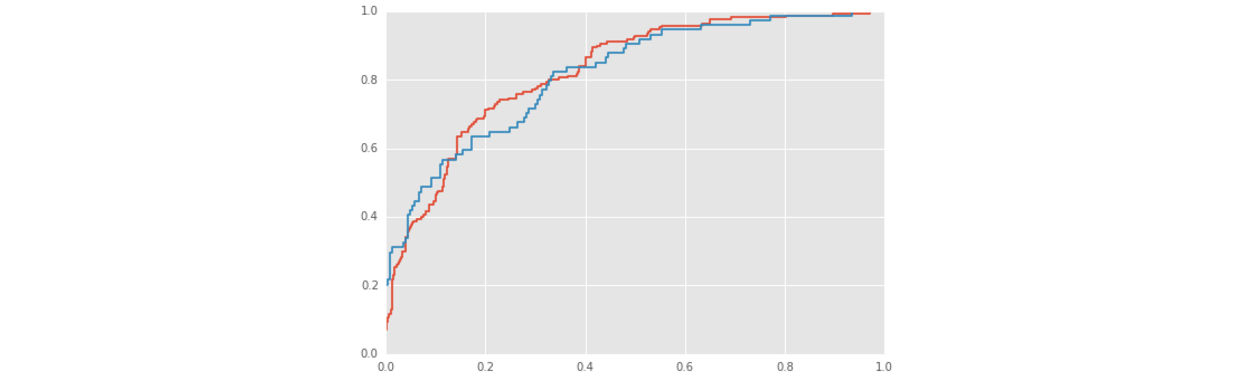
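`percent_admitted` is useful as a reference point: a trivial rule that predicts the majority class for everyone already achieves an accuracy equal to the majority-class share, so the model's test accuracy is only informative relative to that baseline. A minimal sketch of that comparison; the function name and the toy labels are hypothetical.

```python
def majority_baseline_accuracy(labels):
    """Accuracy of always predicting the most common 0/1 label."""
    share_admitted = sum(labels) / len(labels)
    return max(share_admitted, 1 - share_admitted)


# Hypothetical test-set labels: 3 of 10 applicants admitted
test_labels = [0, 1, 0, 0, 1, 0, 0, 0, 1, 0]
baseline = majority_baseline_accuracy(test_labels)
print("Baseline accuracy (always predict 0):", baseline)
```

A model is only adding value if its test accuracy clearly exceeds this baseline.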

Admissions ROC Curve

from sklearn.metrics import roc_curve, roc_auc_score

# Compute the predicted probabilities for the training and test sets

# predict_proba returns probabilities for each class; we want the second column (admit = 1)

train_probs = logistic_model.predict_proba(data_train[['gpa', 'gre']])[:,1]

test_probs = logistic_model.predict_proba(data_test[['gpa', 'gre']])[:,1]

# Compute auc for training set

auc_train = roc_auc_score(data_train["admit"], train_probs)

# Compute auc for test set

auc_test = roc_auc_score(data_test["admit"], test_probs)

# Difference in auc values

auc_diff = auc_train - auc_test
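AUC has a useful interpretation: it equals the probability that a randomly chosen admitted applicant receives a higher predicted probability than a randomly chosen rejected one, with ties counting as one half. A small pure-Python sketch that computes AUC directly from that definition by checking every positive/negative pair; it takes time proportional to P x N, so it is meant for illustration only, and the toy labels and scores are hypothetical.

```python
def pairwise_auc(labels, scores):
    """AUC as P(score of a random positive > score of a random negative)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(pairwise_auc(labels, scores))  # 0.75: 3 of the 4 positive/negative pairs are ordered correctly
```

Read this way, the training AUC of about 0.82 means that in roughly 82% of admitted/rejected pairs the model ranks the admitted applicant higher.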

# Compute ROC Curves

roc_train = roc_curve(data_train["admit"], train_probs)

roc_test = roc_curve(data_test["admit"], test_probs)

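# Plot each ROC curve: false positive rate (x-axis) against true positive rate (y-axis)

plt.plot(roc_train[0], roc_train[1], label="train")

plt.plot(roc_test[0], roc_test[1], label="test")

plt.xlabel("False Positive Rate")

plt.ylabel("True Positive Rate")

plt.legend()

plt.show()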
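Each point on an ROC curve corresponds to one threshold: predict "admitted" when the score is at or above the threshold, then record the false positive rate (FP / negatives) and the true positive rate (TP / positives). A simplified pure-Python sketch that sweeps the distinct scores from highest to lowest and integrates the curve with the trapezoidal rule. It is not scikit-learn's `roc_curve` implementation (which, for example, can drop intermediate points), and the toy data are hypothetical.

```python
def roc_points(labels, scores):
    """Return (fpr, tpr) points obtained by sweeping thresholds from high to low."""
    num_pos = sum(1 for y in labels if y == 1)
    num_neg = len(labels) - num_pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / num_neg, tp / num_pos))
    return points


def trapezoid_area(points):
    """Area under a piecewise-linear curve given as (x, y) points sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
points = roc_points(labels, scores)
print(points)
print(trapezoid_area(points))  # 0.75
```

A steep start means the highest-scoring applicants are mostly true admits, which is the behaviour described for the admissions curves.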

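The ROC curves rise very steeply at first and then gradually level off. The test-set AUC (0.79) is only slightly lower than the training-set AUC (0.82), a gap of about 0.03, so there is no sign of significant overfitting. Together, these signs suggest that our model can predict admission reasonably well from gre and gpa.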
