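[Introduction] ICLR, short for the International Conference on Learning Representations, held its first edition only in 2013. Although the annual conference is only reaching its sixth edition with ICLR 2018, it is already widely recognized by academic researchers and is regarded as a top conference in deep learning. The conference was founded under the lead of Yoshua Bengio and Yann LeCun, two of the "big three" of deep learning. Yoshua Bengio is a professor at the Université de Montréal, where he leads the university's artificial intelligence lab (MILA) in academic research on AI. MILA is one of the largest AI research centers in the world and works closely with Google. Yann LeCun hardly needs an introduction: also one of the big three, he is currently director of Facebook AI Research (FAIR) and a professor at New York University. Known as the father of convolutional neural networks, he has made major contributions to the development of deep learning.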

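ICLR uses the Open Review system, which differs markedly from conventional peer review. Under its rules, every submitted paper is made public together with information such as the authors' names, and is open to comments and questions from all peers (open peer review); any scholar may comment on a paper either anonymously or under their real name. After the open review period ends, authors can still adjust and revise their papers. All papers and review discussions from past ICLR editions are archived in full on OpenReview.net, which is also ICLR's official submission portal. OpenReview.net is an open review system that Andrew McCallum of the University of Massachusetts Amherst led the creation of for ICLR 2013. It follows eight principles: open peer review, open publishing, open access, open discussion, open directory, open recommendations, open API, and open source. It is supported by organizations including Facebook, Google, NSF, and a center at the University of Massachusetts Amherst.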

The list of papers follows:

Source: https://openreview.net/group?id=ICLR.cc/2018/Conference

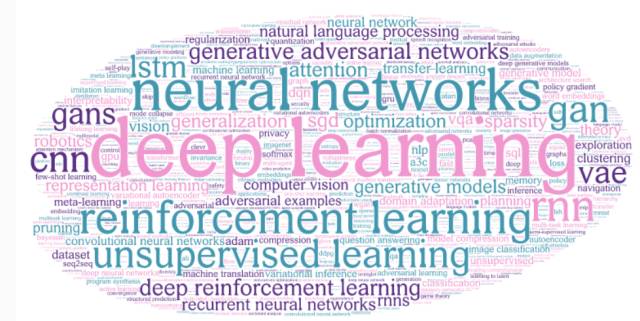

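Zhuanzhi (专知) compiled the following keyword statistics:

As the statistics show, deep learning, neural networks, generative adversarial networks, reinforcement learning, and recurrent neural networks are among the most popular topics in the submitted papers.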

Paper list:

《Improving Discriminator-Generator Balance in Generative Adversarial Networks》:

《Placeholder》:

《Complex- and Real-Valued Neural Network Architectures》:

《Revisiting Knowledge Base Embedding as Tensor Decomposition》:

《Tree2Tree Learning with Memory Unit》:

《Combining Model-based and Model-free RL via Multi-step Control Variates》:

《Hyperedge2vec: Distributed Representations for Hyperedges》:

《Deep Complex Networks》:

《OMIE: The Online Mutual Information Estimator》:

《Few-Shot Learning with Variational Homoencoders》:

《Video Action Segmentation with Hybrid Temporal Networks》:

《Learning Efficient Tensor Representations with Ring Structure Networks》:

《Fitting Data Noise in Variational Autoencoder》:

《Bayesian Uncertainty Estimation for Batch Normalized Deep Networks》:

《A Goal-oriented Neural Conversation Model by Self-Play》:

《Automatic Goal Generation for Reinforcement Learning Agents》:

《A novel method to determine the number of latent dimensions with SVD》:

《Universal Agent for Disentangling Environments and Tasks》:

《Covariant Compositional Networks For Learning Graphs》:

《Deep learning mutation prediction enables early stage lung cancer detection in liquid biopsy》:

- Keywords: somatic mutation variant calling cancer liquid biopsy early detection convolution deep learning machine learning lung cancer error suppression mutect

- Download: https://openreview.net/pdf/3da2a17bf6bec5ff1a8f0dd52c100ceb17694e76.pdf

《Learning To Generate Reviews and Discovering Sentiment》:

《Noise-Based Regularizers for Recurrent Neural Networks》:

《Prediction Under Uncertainty with Error Encoding Networks》:

《Genative Entity Networks: Disentangling Entitites and Attributes in Visual Scenes using Partial Natural Language Descriptions》:

《WSNet: Learning Compact and Efficient Networks with Weight Sampling》:

《TD Learning with Constrained Gradients》:

《Improving the Improved Training of Wasserstein GANs》:

《Exploring Representation Methods for Sequence Labeling》:

《Fraternal Dropout》:

《What are image captions made of?》:

《Sequential Coordination of Deep Models for Learning Visual Arithmetic》:

《DETECTING ADVERSARIAL PERTURBATIONS WITH SALIENCY》:

《An inference-based policy gradient method for learning options》:

《Generative Entity Networks: Disentangling Entities and Attributes in Visual Scenes using Partial Natural Language Descriptions》:

《Don’t encrypt the data; just approximate the model \ Towards Secure Transaction and Fair Pricing of Training Data》:

《Alpha-divergence bridges maximum likelihood and reinforcement learning in neural sequence generation》:

《3C-GAN: AN CONDITION-CONTEXT-COMPOSITE GENERATIVE ADVERSARIAL NETWORKS FOR GENERATING IMAGES SEPARATELY》:

《Parametric Information Bottleneck to Optimize Stochastic Neural Networks》:

《Towards a Testable Notion of Generalization for Generative Adversarial Networks》:

《TOWARDS ROBOT VISION MODULE DEVELOPMENT WITH EXPERIENTIAL ROBOT LEARNING》:

《Variational Bi-LSTMs》:

《Learning an Embedding Space for Transferable Robot Skills》:

《ON MODELING HIERARCHICAL DATA VIA ENCAPSULATION OF PROBABILITY DENSITIES》:

《withdraw》:

《Neural Compositional Denotational Semantics for Question Answering》:

《Model compression via distillation and quantization》:

《Binarized Back-Propagation: Training Binarized Neural Networks with Binarized Gradients》:

《DON’T ENCRYPT THE DATA, JUST APPROXIMATE THE MODEL/ TOWARDS SECURE TRANSACTION AND FAIR PRICING OF TRAINING DATA》:

《Optimal transport maps for distribution preserving operations on latent spaces of Generative Models》:

《Learning Representations for Faster Similarity Search》:

《Maximum a Posteriori Policy Optimisation》: