Image compression is an important step towards our long-term goals at WaveOne. We are excited to share some early results of our research, which will appear at ICML 2017. You can find the paper on arXiv.

As of today, WaveOne image compression outperforms all commercial codecs and research approaches known to us on standard datasets where comparison is available. Furthermore, with access to a GPU, our codec runs orders of magnitude faster than other recent ML-based solutions: for example, we typically encode or decode the Kodak dataset at over 100 images per second.

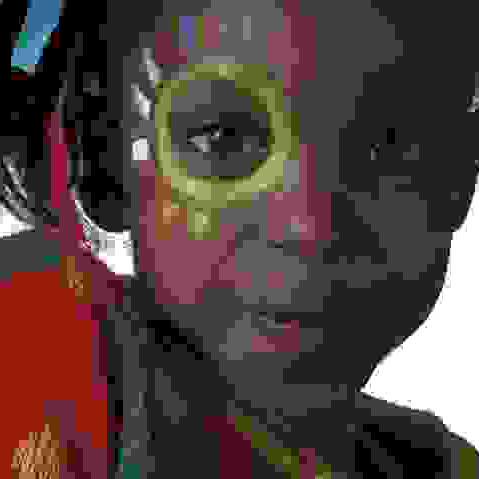

Here is how different image codecs compress an example 480x480 image to a file size of 2.3kB (~0.08 bits per pixel):
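For readers who want to check the bits-per-pixel figure, here is a minimal sketch of the arithmetic. It assumes 1 kB means 1000 bytes, which is our assumption rather than something stated above; the function name is purely illustrative.

```python
def bits_per_pixel(file_size_bytes, width, height):
    """Average number of bits spent on each pixel of the image."""
    return file_size_bytes * 8 / (width * height)


# 2.3 kB file for a 480x480 image, assuming 1 kB = 1000 bytes.
bpp = bits_per_pixel(2300, 480, 480)
print(f"{bpp:.3f} bits per pixel")
```

Under the 1024-byte convention the value shifts only slightly and still rounds to about 0.08.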

[Images: reconstructions by JPEG, JPEG 2000, WebP, and WaveOne]

Now is the time to rethink compression

Even though over 70% of internet traffic today is digital media, the way images and video are represented and transmitted has not evolved much in the past 20 years (apart from Pied Piper's Middle-Out algorithm). It has been challenging for existing commercial compression algorithms to adapt to the growing demand and the changing landscape of transmission settings and requirements. The available codecs are "one-size-fits-all": they are hard-coded and cannot be customized to particular use cases beyond high-level hyperparameter tuning.

At the same time, in the last few years, deep learning has revolutionized a variety of fields, such as machine translation, object recognition/detection, speech recognition, and photo-realistic image generation. Even though the world of compression seems a natural domain for deep learning, it has not yet fully benefited from these advancements, for two main reasons:

- Our deep learning primitives, in their raw forms, are not well-suited for constructing compact representations. Vanilla autoencoder architectures cannot compete with the carefully engineered and highly tuned traditional codecs.

- It has been particularly challenging to develop a deep learning approach which can run in real time in environments constrained by computation power, memory footprint, and battery life.

Fortunately, the ubiquity of deep learning has been catalyzing the development of hardware architectures for neural network acceleration. In the years ahead, we foresee dramatic improvements in the speed, power consumption, and availability of neural network hardware, and this will enable the proliferation of codecs based on deep learning.

In the paper, we present progress on both performance and computational feasibility of ML-based image compression.

WaveOne compression performance

Here are some more detailed results from our paper:

Performance on the Kodak PhotoCD dataset measured in the RGB domain (dataset and colorspace chosen to enable comparison to other approaches; see the paper for more extensive results). The plot on the left presents average reconstruction quality as a function of the number of bits per pixel fixed for each image. The plot on the right shows average compressed file sizes relative to ours for different target MS-SSIM values.

Real-time performance is critically important to us, and we carefully designed and optimized our architecture to make things fast. Here's the raw encode/decode performance:

Average times to encode and decode images from the RAISE-1k 512×768 dataset using the WaveOne codec on an NVIDIA GTX 980 Ti GPU with a batch size of 1.

Although we are slightly faster than JPEG (libjpeg) and significantly faster than JPEG 2000, WebP and BPG, our codec runs on a GPU and traditional codecs do not, so we do not show this comparison. We also don't have access to the full code of recent ML approaches to compare against.
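As a rough illustration of how average per-image timings like these can be collected, here is a minimal sketch of a batch-size-1 timing loop. The `placeholder_encode` function is a hypothetical stand-in, not the WaveOne encoder, and the harness itself is our assumption about a reasonable setup rather than the procedure used for the figure.

```python
import time


def average_latency(fn, inputs, warmup=2):
    """Average wall-clock seconds per call of fn, one input at a time."""
    # Warm-up calls keep one-time setup costs out of the measurement.
    for item in inputs[:warmup]:
        fn(item)
    start = time.perf_counter()
    for item in inputs:
        fn(item)
    # Note: with a GPU codec, the device would need to be synchronized
    # before reading the clock; this CPU-only sketch omits that step.
    elapsed = time.perf_counter() - start
    return elapsed / len(inputs)


def placeholder_encode(image):
    # Hypothetical stand-in for an encoder: one checksum byte per row.
    return bytes(sum(row) % 256 for row in image)


# Twenty synthetic 64x64 grayscale "images" as nested lists of ints.
images = [[[(x + y + k) % 256 for x in range(64)] for y in range(64)]
          for k in range(20)]

seconds_per_image = average_latency(placeholder_encode, images)
images_per_second = 1.0 / seconds_per_image
print(f"{seconds_per_image * 1e3:.3f} ms per image, "
      f"{images_per_second:.0f} images per second")
```

Throughput is simply the inverse of latency, so a rate of 100 images per second corresponds to 10 ms per image when images are processed one at a time.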

Domain-adaptive compression

Here is an interesting tidbit not discussed in the paper. Since our compression algorithm is learned rather than hard-coded, we can easily train codecs custom-tailored to specific domains. This enables capturing particular structure that the traditional one-size-fits-all codecs would not be able to characterize.

To illustrate this, we created a custom codec specifically to compress aerial views. To do this, we only replaced our training dataset: we preserved exactly the same architecture and training procedure we followed for our generic image codec. This aerial codec was able to achieve another 20% boost in compression over our generic codec on our aerial view test set.
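The idea of keeping the training procedure fixed and swapping only the data can be shown with a toy example. The sketch below is not the WaveOne architecture: it substitutes a simple learned scalar quantizer (Lloyd's algorithm, i.e. 1-D k-means) for the neural codec, and the "generic" and "domain" datasets are synthetic assumptions made up for illustration.

```python
import random


def train_codebook(samples, levels=8, iterations=20):
    """Fit a scalar quantizer codebook with Lloyd's algorithm (1-D k-means)."""
    ordered = sorted(samples)
    # Initialise the codes at evenly spaced quantiles of the training data.
    codebook = [ordered[(2 * i + 1) * len(ordered) // (2 * levels)]
                for i in range(levels)]
    for _ in range(iterations):
        buckets = [[] for _ in codebook]
        for x in samples:
            nearest = min(range(levels), key=lambda i: abs(x - codebook[i]))
            buckets[nearest].append(x)
        # Move each code to the mean of its bucket; keep it if the bucket is empty.
        codebook = [sum(b) / len(b) if b else c
                    for b, c in zip(buckets, codebook)]
    return codebook


def distortion(samples, codebook):
    """Mean squared error after snapping each sample to its nearest code."""
    return sum(min((x - c) ** 2 for c in codebook) for x in samples) / len(samples)


rng = random.Random(0)
generic_train = [rng.uniform(0, 255) for _ in range(2000)]  # broad "generic" data
domain_train = [rng.gauss(120, 10) for _ in range(2000)]    # narrow "domain" data
domain_test = [rng.gauss(120, 10) for _ in range(500)]

# Same procedure and same code budget (8 levels = 3 bits per sample);
# only the training data differs.
generic_codebook = train_codebook(generic_train)
domain_codebook = train_codebook(domain_train)

generic_error = distortion(domain_test, generic_codebook)
domain_error = distortion(domain_test, domain_codebook)
print(f"generic codebook MSE on domain data: {generic_error:.2f}")
print(f"domain codebook MSE on domain data:  {domain_error:.2f}")
```

At the same bit budget, the codebook trained on domain data spends its levels where that data actually lies, so it reconstructs the domain test set with lower error. A hard-coded codec has no equivalent of this retraining step.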

[Images: reconstructions by JPEG, JPEG 2000, and WaveOne Aerial]

Crops from reconstructions by different codecs for a target file size of 6kB (or equivalently 0.11 bits per pixel). WebP is not shown as it was not able to generate files of such a small bitrate. The WaveOne codec was custom-trained on aerial views to capture characteristic structure.

Link: http://www.wave.one/icml2017

Original link: http://weibo.com/1715118170/F3JjAdhEx?ref=home&rid=4_0_202_2778228337377857517&type=comment