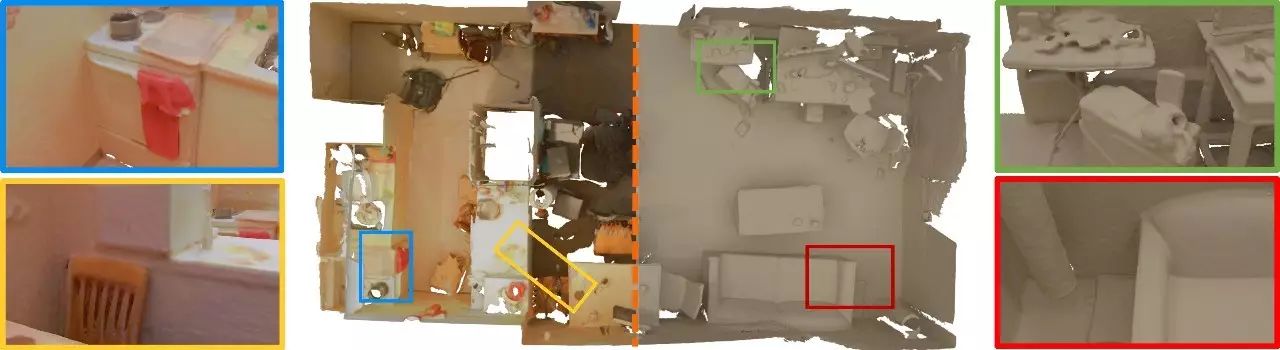

通过扫描实时构建出高精细度3D模型,斯坦福大学、微软研究院和德国mpii共同开发的BundleFusion:实时全局一致的3D重建,使用动态表面重新整合技术。论文《BundleFusion: Real-time Globally Consistent 3D Reconstruction using Online Surface Re-integration》

实时,高品质,大尺寸场景的3D扫描是混合现实和机器人应用的关键。然而,可扩展性带来了姿态估计漂移的挑战,在累积模型中引入了显着的错误。方法通常需要几个小时的离线处理来全局纠正模型错误。最近的在线方法证明了令人信服的结果,但遭受以下缺点:(1)需要几分钟的时间才能执行在线修正,影响了真正的实时使用;

(2)脆弱的帧到帧(或帧到模型)姿态估计导致许多跟踪失败;或(3)仅支持非结构化的基于点的表示,这限制了扫描质量和适用性。我们通过一个新颖的,实时的端对端重建框架来系统地解决这些问题。其核心是强大的姿态估计策略,通过考虑具有高效分层方法的RGB-D输入的完整历史,针对全局摄像机姿态优化每帧。我们消除了对时间跟踪的严重依赖,并且不断地将其定位到全局优化的帧。我们提出了一个可并行化的优化框架,它采用基于稀疏特征和密集几何和光度匹配的对应关系。我们的方法估计全局最优化(即,束调整的姿势)实时,支持从总跟踪故障(即重新定位)恢复的鲁棒跟踪,并实时重新估计3D模型以确保全局一致性;都在一个框架内。我们优于最先进的在线系统,质量与离线方法相同,但速度和扫描速度前所未有。我们的框架导致尽可能简单的扫描,使用方便和高质量的结果。

摘要:

Real-time, high-quality, 3D scanning of large-scale scenes is key to

mixed reality and robotic applications.

However, scalability brings challenges of drift in pose estimation,

introducing significant errors in the accumulated model.

Approaches often require hours of offline processing to globally

correct model errors. Recent online methods demonstrate compelling

results, but suffer from: (1) needing minutes to perform online

correction preventing true real-time use; (2) brittle frame-to-frame (or

frame-to-model) pose estimation resulting in many tracking failures; or

(3) supporting only unstructured point-based representations, which

limit scan quality and applicability.

We systematically address these issues with a novel, real-time,

end-to-end reconstruction framework.

At its core is a robust pose estimation strategy, optimizing per frame

for a global set of camera poses by considering the complete history of

RGB-D input with an efficient hierarchical approach.

We remove the heavy reliance on temporal tracking, and continually

localize to the globally optimized frames instead.

We contribute a parallelizable optimization framework, which employs

correspondences based on sparse features and dense geometric and

photometric matching. Our approach estimates globally optimized (i.e.,

bundle adjusted poses) in real-time, supports robust tracking with

recovery from gross tracking failures (i.e., relocalization), and

re-estimates the 3D model in real-time to ensure global consistency; all

within a single framework.

We outperform state-of-the-art online systems with quality on par to

offline methods, but with unprecedented speed and scan completeness.

Our framework leads to as-simple-as-possible scanning, enabling ease

of use and high-quality results.

项目主页:

http://graphics.stanford.edu/projects/bundlefusion/

原文链接:

http://weibo.com/5501429448/F6zjHmd5B?ref=home&rid=2_0_202_2666926733913912891&type=comment